Identifying and choosing the appropriate temperature data for a specific location and time, such as Eugene, Oregon, is essential for many applications. This involves accessing weather records and selecting the readings relevant to the area and timeframe of interest. For instance, an analysis of building energy consumption in Eugene requires the specific temperature values that correspond to the periods when the building was in use.
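As a minimal sketch of that last step, the snippet below keeps only the readings that fall inside a building's occupancy window. It assumes hourly readings are already loaded as `(timestamp, °C)` pairs, and it assumes the building is occupied from 08:00 to 18:00 on weekdays; both the readings and the window are hypothetical, not real Eugene data.

```python
from datetime import datetime, time

# Hypothetical hourly readings as (timestamp, temperature in °C); not real Eugene data.
readings = [
    (datetime(2023, 7, 10, 6, 0), 14.0),   # Monday, before opening
    (datetime(2023, 7, 10, 9, 0), 19.5),   # Monday, occupied
    (datetime(2023, 7, 10, 15, 0), 29.0),  # Monday, occupied
    (datetime(2023, 7, 10, 21, 0), 21.0),  # Monday, after closing
    (datetime(2023, 7, 15, 12, 0), 27.5),  # Saturday
]

def occupied_readings(readings, start=time(8, 0), end=time(18, 0), weekdays_only=True):
    """Keep readings inside an assumed occupancy window [start, end)."""
    selected = []
    for ts, temp_c in readings:
        if weekdays_only and ts.weekday() >= 5:  # weekday(): 5 = Saturday, 6 = Sunday
            continue
        if start <= ts.time() < end:
            selected.append((ts, temp_c))
    return selected

for ts, temp_c in occupied_readings(readings):
    print(ts.isoformat(), temp_c)
```

In a real analysis, the occupancy schedule would come from building records rather than a fixed window.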

Accurate temperature data is crucial for informed decision-making in several domains. In construction, it aids in determining appropriate building materials and insulation requirements. In agriculture, it informs irrigation schedules and planting times. In scientific research, it serves as a baseline for climate studies and weather pattern analysis. Historical temperature records provide valuable context for understanding long-term environmental changes and predicting future trends in the region.

Understanding this initial step is fundamental before delving into more complex topics such as statistical temperature analysis, climate modeling, or the impacts of environmental conditions on local ecosystems. Subsequent discussions will build upon this foundation to explore these areas in greater detail.

Considerations for Temperature Data Selection

Obtaining precise temperature information for Eugene, Oregon, requires careful consideration of several factors to ensure data accuracy and relevance for various applications.

Tip 1: Identify the Specific Data Source: Utilize reliable weather databases, such as those provided by the National Weather Service or accredited meteorological organizations. These sources undergo rigorous quality control measures, ensuring greater accuracy.

Tip 2: Define the Required Temporal Resolution: Determine the necessary time granularity of the data. Hourly readings are preferable for detailed analyses, whereas daily averages may suffice for broader assessments.

Tip 3: Account for Potential Data Gaps: Recognize the possibility of missing data points. Employ interpolation techniques or consult multiple sources to fill gaps effectively. Document any imputation methods utilized.

Tip 4: Verify Station Location Proximity: Select weather stations geographically close to the area of interest within Eugene. Temperature variations can occur even within relatively short distances due to localized microclimates.

Tip 5: Evaluate Data Consistency: Examine the data for anomalies or inconsistencies. Cross-reference data from different sources to validate temperature readings and identify potential errors.

Tip 6: Understand Measurement Unit Standardization: Ensure that temperature readings are presented in the desired unit (Celsius or Fahrenheit). Convert units appropriately to maintain consistency across analyses.

Tip 7: Document the Entire Data Acquisition Process: Maintain a comprehensive record of all data sources, selection criteria, and processing steps. This documentation enhances transparency and facilitates reproducibility.

By meticulously addressing these considerations, researchers, engineers, and policymakers can obtain reliable temperature data that contributes to sound decision-making related to energy management, agricultural practices, and climate resilience in the Eugene, Oregon area.

Adhering to these guidelines ensures a solid foundation for more advanced analyses and informed strategic planning within the region.

1. Data Source Reliability

The reliability of the data source is paramount when selecting temperature information for Eugene, Oregon. Inaccurate or unreliable data can lead to flawed analyses and misinformed decisions across various sectors. The selection process, therefore, hinges on identifying and utilizing sources with established quality control procedures and proven track records. The National Weather Service, for example, maintains a network of calibrated sensors and employs rigorous validation protocols. Selecting data from such a source minimizes the risk of errors stemming from faulty equipment or inconsistent measurement practices. Conversely, relying on unverified or crowd-sourced data may introduce significant uncertainties that compromise the integrity of any subsequent analyses. Erroneous temperature readings can have far-reaching consequences, affecting resource allocation, public safety measures, and long-term planning efforts.

Practical applications of reliable temperature data are widespread in Eugene. For instance, local utility companies rely on historical temperature trends to forecast energy demand and optimize power grid operations. Civil engineers utilize validated temperature records to design infrastructure resilient to extreme weather events. Agricultural researchers leverage accurate temperature data to model crop yields and develop strategies for mitigating the impacts of climate variability on local farming practices. In each of these scenarios, the credibility of the temperature information is directly linked to the effectiveness and accuracy of the resulting outcomes.

In summary, ensuring the reliability of the data source is an indispensable step in the broader process of selecting temperature data for Eugene, Oregon. While the availability of diverse data sources has increased in recent years, careful scrutiny and validation are essential to mitigate the potential for errors and ensure the integrity of analyses informed by this information. The challenges associated with data reliability underscore the need for adherence to established protocols and the prioritization of trusted sources in any application requiring accurate temperature readings.

2. Temporal Resolution

Temporal resolution, in the context of selecting temperature data for Eugene, Oregon, refers to the frequency at which temperature measurements are recorded. It directly influences the granularity and precision of subsequent analyses. High temporal resolution, such as hourly or sub-hourly measurements, offers a detailed view of temperature fluctuations throughout the day. This level of detail is vital for applications that require a nuanced understanding of temperature variations, such as building energy modeling or localized climate studies. Conversely, lower temporal resolution, such as daily or monthly averages, provides a broader overview but may mask short-term temperature extremes. Choosing an appropriate temporal resolution is therefore crucial: it must align with the specific requirements of the application. A mismatch between temporal resolution and analytical needs can lead to inaccurate conclusions or ineffective interventions. For example, using daily average temperatures to assess the impact of short-duration heatwaves on vulnerable populations would likely underestimate the true extent of the health risks involved.
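The heatwave example can be made concrete with a short sketch. It collapses one synthetic day of hourly readings into a daily mean and a daily maximum and counts the hours above a threshold. The temperature profile is deliberately simplified, and the 32 °C threshold is a hypothetical value chosen only for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def daily_summary(hourly):
    """Group (timestamp, °C) pairs by calendar date; return {date: (mean, max)}."""
    by_day = defaultdict(list)
    for ts, temp in hourly:
        by_day[ts.date()].append(temp)
    return {day: (sum(v) / len(v), max(v)) for day, v in by_day.items()}

# Synthetic, simplified hot day: 12 h at 18 °C, 6 h at 38 °C, 6 h at 22 °C.
start = datetime(2023, 7, 10)
temps = [18] * 12 + [38] * 6 + [22] * 6
hourly = [(start + timedelta(hours=h), t) for h, t in enumerate(temps)]

HEAT_THRESHOLD_C = 32  # hypothetical threshold chosen for illustration
mean_c, max_c = daily_summary(hourly)[start.date()]
hours_above = sum(1 for _, t in hourly if t > HEAT_THRESHOLD_C)
print(f"daily mean {mean_c:.1f} °C, daily max {max_c} °C, {hours_above} h above {HEAT_THRESHOLD_C} °C")
```

The daily mean works out to 24 °C, which looks unremarkable, while the hourly record shows six hours at 38 °C. Only the finer resolution exposes that exposure.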

The impact of temporal resolution is evident in various practical scenarios in Eugene. In agricultural planning, hourly temperature data aids in optimizing irrigation schedules and predicting crop development stages, taking into account the daily cycle of temperature changes. Similarly, for managing energy demand during peak periods, utilities rely on high-resolution temperature data to anticipate fluctuations in electricity consumption driven by air conditioning usage. Conversely, for long-term climate trend analyses, monthly or annual temperature averages may suffice. The choice of temporal resolution directly affects the computational resources required for data processing and storage. Handling high-resolution datasets demands greater storage capacity and processing power. The balance between temporal resolution and resource constraints should be carefully considered.
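To put rough numbers on the storage trade-off, the sketch below counts how many readings a single station produces per year at several intervals. The 16-bytes-per-record figure is an assumption (an uncompressed 8-byte timestamp plus an 8-byte float). Leap years are ignored for simplicity.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # ignores leap years for simplicity
BYTES_PER_RECORD = 16  # assumption: an 8-byte timestamp plus an 8-byte float, uncompressed

def records_per_year(interval_minutes):
    """Number of readings one station produces in a year at a fixed interval."""
    return MINUTES_PER_YEAR // interval_minutes

for label, minutes in [("5-minute", 5), ("hourly", 60), ("daily", 1440)]:
    n = records_per_year(minutes)
    print(f"{label:>8}: {n:>7} records/year, about {n * BYTES_PER_RECORD / 1024:.0f} KiB")
```

The per-station sizes are small. The differences matter when they are multiplied across many stations, variables, and decades of record.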

In summary, the selection of appropriate temporal resolution is a critical consideration in selecting temperature data for Eugene, Oregon. It significantly influences the level of detail captured, the accuracy of subsequent analyses, and the computational resources required. Understanding the trade-offs associated with different temporal resolutions is essential for ensuring that the selected data is fit for purpose and contributes to informed decision-making across a range of applications. The challenges in managing and analyzing high-resolution temperature datasets highlight the need for appropriate infrastructure and data management strategies to fully leverage the potential benefits of granular temperature information.

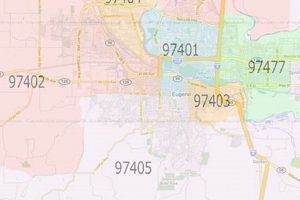

3. Geographic Proximity

Geographic proximity serves as a critical determinant in the accurate selection of temperature data relevant to Eugene, Oregon. Temperature is influenced by factors such as elevation, proximity to water bodies, urban heat island effects, and local topography, so readings from a distant weather station may not accurately reflect conditions within Eugene. For instance, because of its higher elevation, a station in the foothills surrounding Eugene will generally record cooler daytime temperatures than one in the city center. On clear, calm nights, however, cold air drains downslope and pools in low-lying areas, so a valley-floor station can be colder than one partway up a slope, while heat retained by the city center works in the opposite direction. In general, the greater the horizontal or vertical distance between the measurement location and the target area, the greater the potential for discrepancies. This necessitates a careful evaluation of available weather stations and the selection of those most representative of the specific microclimate within Eugene.
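One way to begin that evaluation is to rank candidate stations by great-circle (haversine) distance and flag those whose elevation differs markedly from the site of interest. This is a minimal sketch. The target coordinates and elevation only roughly approximate central Eugene. The three stations are hypothetical, and the 100 m elevation tolerance is an assumed cutoff.

```python
import math

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km, treating Earth as a sphere of radius 6371 km."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))

def rank_stations(target, stations, max_elev_diff_m=100.0):
    """Sort stations by distance to target and flag large elevation differences.

    target and each station are dicts with 'lat', 'lon' and 'elev_m' keys."""
    ranked = []
    for name, s in stations.items():
        d = haversine_km(target["lat"], target["lon"], s["lat"], s["lon"])
        elev_flag = abs(s["elev_m"] - target["elev_m"]) > max_elev_diff_m
        ranked.append((name, round(d, 1), elev_flag))
    return sorted(ranked, key=lambda row: row[1])

# Approximate site of interest and hypothetical stations (illustrative values only).
target = {"lat": 44.05, "lon": -123.09, "elev_m": 130.0}
stations = {
    "station_a": {"lat": 44.12, "lon": -123.21, "elev_m": 110.0},
    "station_b": {"lat": 44.03, "lon": -123.05, "elev_m": 180.0},
    "station_c": {"lat": 44.00, "lon": -122.85, "elev_m": 600.0},
}
for name, km, flag in rank_stations(target, stations):
    print(f"{name}: {km} km" + ("  (elevation differs by more than 100 m)" if flag else ""))
```

Distance and elevation are only a first screen. A nearby station may still sit in a different exposure, so its siting should be checked as well.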

Real-world applications illustrate the practical significance of geographic proximity. In urban planning, inaccurate temperature data could lead to inadequate building design and inefficient energy consumption. If infrastructure is planned based on temperature data collected far outside of Eugene, cooling systems might be incorrectly sized, resulting in occupant discomfort and increased energy costs. In agricultural contexts, using temperature readings from a distant location could lead to flawed irrigation scheduling, impacting crop yields and water usage. Similarly, public health initiatives that address heat-related illnesses rely on localized temperature data to accurately assess risk and implement appropriate interventions. Ignoring the factor of geographic proximity could result in underestimation of heat risks in certain areas of Eugene and overestimation in others, leading to inefficient resource allocation and potentially inadequate protection of vulnerable populations.

In summary, the concept of geographic proximity forms a cornerstone of accurate temperature data selection for Eugene, Oregon. Its impact stretches across urban planning, agriculture, public health, and various other sectors. Understanding and addressing the challenges associated with localized temperature variations are crucial to ensure that decisions are based on reliable and representative data. This understanding fosters greater precision in resource allocation, risk assessment, and the development of effective strategies for mitigating the impacts of weather and climate on the region.

4. Data Consistency

Data consistency is a fundamental requirement in the accurate selection of temperature data for Eugene, Oregon. It refers to the uniformity and reliability of temperature readings across different sources and time periods. Inconsistent data introduces errors that can propagate through subsequent analyses, undermining the validity of conclusions drawn. For example, if temperature readings for a specific date in Eugene vary significantly between the National Weather Service and a local amateur weather station, a thorough investigation is required to determine the source of the discrepancy. Possible causes include sensor malfunction, calibration errors, or differences in measurement methodologies. Failure to address such inconsistencies can lead to skewed averages, inaccurate trend estimations, and flawed decision-making in areas such as energy management, agricultural planning, and public health initiatives.
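A simple cross-reference between two sources can be sketched as follows. Daily readings are keyed by date, and the sketch reports the dates where the sources disagree by more than a tolerance, along with the dates present in only one source. The readings and the 1.5 °C tolerance are hypothetical. In practice, the tolerance would reflect the sensors' stated accuracy.

```python
def cross_check(primary, secondary, tolerance=1.5):
    """Compare daily readings keyed by date.

    Returns (flagged, missing): flagged maps dates where |primary - secondary| > tolerance
    to the difference; missing lists dates present in only one source."""
    flagged = {}
    for day in sorted(primary.keys() & secondary.keys()):
        diff = primary[day] - secondary[day]
        if abs(diff) > tolerance:
            flagged[day] = round(diff, 2)
    missing = sorted(primary.keys() ^ secondary.keys())
    return flagged, missing

# Hypothetical daily highs in °C from an official source and an amateur station.
official = {"2023-07-01": 31.1, "2023-07-02": 33.4, "2023-07-03": 29.8, "2023-07-04": 30.2}
amateur = {"2023-07-01": 31.6, "2023-07-02": 37.9, "2023-07-03": 29.5}

flagged, missing = cross_check(official, amateur)
print("investigate:", flagged)
print("present in one source only:", missing)
```

A flagged date does not say which source is wrong. It marks where the investigation described above should start.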

The practical significance of data consistency is evident in numerous applications. Consider a scenario where researchers are investigating the impact of climate change on tree growth in the Eugene area. If the temperature data used for the analysis contains inconsistencies, the resulting correlation between temperature and tree growth might be spurious, leading to misguided conclusions about the effects of climate change. Similarly, if a construction company relies on inconsistent temperature data to determine appropriate building materials, the structure might be vulnerable to temperature-related damage, resulting in costly repairs and potential safety hazards. Ensuring data consistency often involves cross-referencing multiple data sources, applying statistical techniques to identify outliers, and implementing quality control measures to detect and correct errors. Data validation and error correction methodologies become indispensable for ensuring consistent readings. These methodologies should be a part of a standard operating procedure used during data collection or selection.
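As one example of a statistical outlier check, the sketch below uses the modified z-score of Iglewicz and Hoaglin. It measures spread with the median absolute deviation (MAD), so a single bad reading cannot inflate the spread and hide itself. The 3.5 cutoff is the value commonly used with this score. The daily highs are hypothetical.

```python
from statistics import median

def mad_outliers(values, threshold=3.5):
    """Return indices whose modified z-score, 0.6745 * (x - median) / MAD, exceeds threshold."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # all values (nearly) identical; the score is undefined
    return [i for i, v in enumerate(values) if abs(0.6745 * (v - med) / mad) > threshold]

# Hypothetical daily highs in °C with one isolated spike.
highs = [24.1, 25.3, 23.8, 26.0, 24.7, 61.0, 25.1]
print(mad_outliers(highs))
```

A flagged value should be reviewed against metadata and other sources before it is removed or corrected. Sometimes an extreme reading is genuine.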

In summary, data consistency is a critical component of selecting temperature data for Eugene, Oregon, impacting the reliability of analyses and the effectiveness of decisions across various sectors. Addressing data inconsistencies requires rigorous validation procedures, cross-referencing multiple sources, and understanding potential sources of error. While achieving perfect consistency may be challenging, prioritizing data quality and implementing robust validation protocols is essential for minimizing errors and ensuring the integrity of temperature-dependent analyses. The implementation of a comprehensive data quality framework, including consistency checks, is essential for accurate assessment and informed action based on temperature data in the region.

5. Unit Standardization

Unit standardization is a crucial but often overlooked component of accurate temperature data selection for Eugene, Oregon. Temperature readings can be expressed in either Celsius (°C) or Fahrenheit (°F), and inconsistent units can lead to significant errors in analysis and decision-making. Combining data without standardizing units is akin to comparing apples and oranges: any subsequent comparison or calculation becomes meaningless. The conversion is not a pure scaling. Because °F = °C × 9/5 + 32 involves both a scale factor and an offset, the size of the error from a mislabeled value depends on the value itself. For example, a reading of 20 °C mistakenly treated as 20 °F corresponds to about -6.7 °C, an error of nearly 27 °C. A seemingly minor oversight in this aspect can have cascading effects, jeopardizing the validity of research findings, engineering designs, and public safety measures.
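The conversion formulas, and a normalization step that refuses unknown unit labels, can be sketched as shown below. It assumes that each reading carries an explicit unit tag ("C" or "F"). The mixed readings are hypothetical.

```python
def c_to_f(temp_c):
    """Absolute temperature °C -> °F: scale by 9/5, then add the 32-degree offset."""
    return temp_c * 9 / 5 + 32

def f_to_c(temp_f):
    """Absolute temperature °F -> °C: remove the offset first, then scale by 5/9."""
    return (temp_f - 32) * 5 / 9

def to_celsius(value, unit):
    """Normalize a reading tagged with its unit ("C" or "F") to °C."""
    unit = unit.strip().upper()
    if unit == "C":
        return value
    if unit == "F":
        return f_to_c(value)
    raise ValueError(f"unknown temperature unit: {unit!r}")

# Hypothetical mixed-unit readings merged from two sources.
mixed = [(21.0, "C"), (69.8, "F"), (95.0, "F"), (35.0, "C")]
normalized = [round(to_celsius(v, u), 2) for v, u in mixed]
print(normalized)
```

Raising an error on an unrecognized unit is deliberate. Silently passing such a value through would reintroduce the problem that standardization is meant to prevent.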

In practical terms, consider a civil engineering project in Eugene where temperature data is used to assess the thermal expansion and contraction of bridge components. If some data is expressed in Celsius and other data in Fahrenheit, and this difference is not accounted for, the calculated expansion and contraction values will be incorrect. A temperature range of 1 °C equals 1.8 °F, so applying a per-degree-Celsius expansion coefficient to a Fahrenheit range overstates movement by a factor of 1.8. This could lead to expansion joints or other components being sized for the wrong movement. Similarly, in agricultural applications, irrigation schedules based on incorrectly converted temperature data can lead to over- or under-watering of crops, affecting yields and water conservation efforts. Official sources may publish temperatures in either unit depending on the product (U.S. public forecasts are usually given in Fahrenheit, while aviation weather observations report temperature in Celsius), so the user must verify the units before incorporating the data into any analysis. Failing to do so represents a fundamental flaw in the data selection process.
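The bridge example can be checked with the linear expansion formula ΔL = α · L · ΔT. The sketch below compares a correctly converted temperature range with a Fahrenheit range fed directly into a per-°C coefficient. The coefficient (12 × 10⁻⁶ per °C, a typical order of magnitude for structural steel), the 50 m span, and the design range are all assumptions chosen for illustration.

```python
ALPHA_STEEL_PER_C = 12e-6  # assumption: typical order of magnitude for structural steel, per °C

def expansion_mm(length_m, delta_c, alpha_per_c=ALPHA_STEEL_PER_C):
    """Linear thermal expansion dL = alpha * L * dT, returned in millimetres."""
    return alpha_per_c * length_m * delta_c * 1000

span_m = 50.0                  # hypothetical span length
low_f, high_f = 14.0, 104.0    # hypothetical design range recorded in °F (-10 °C to 40 °C)
delta_f = high_f - low_f       # 90 °F
delta_c = delta_f * 5 / 9      # 50 °C: a *difference* converts by the scale factor only

correct = expansion_mm(span_m, delta_c)
wrong = expansion_mm(span_m, delta_f)  # °F range fed to a per-°C coefficient
print(f"correct: {correct:.1f} mm, unit mix-up: {wrong:.1f} mm")
```

The offset of 32 must not be applied to a temperature difference. Converting the 90 °F range as if it were an absolute reading would give about 32.2 °C instead of 50 °C, which is a different error of its own.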

In summary, unit standardization is not merely a technical detail but a critical prerequisite for sound temperature data selection in Eugene, Oregon. Neglecting this aspect can lead to significant errors with real-world consequences across diverse sectors. The challenge lies not only in recognizing the importance of unit standardization but also in implementing robust procedures to ensure that all data is expressed in a consistent unit system. This requires careful attention to metadata, data validation techniques, and a thorough understanding of the implications of unit conversion on subsequent analyses. Emphasizing proper unit standardization practices promotes accuracy and enhances the reliability of temperature-dependent applications within the region.

6. Data Gap Handling

The proper handling of data gaps constitutes a critical element in the accurate selection of temperature data for Eugene, Oregon. Gaps in temperature records, arising from sensor malfunctions, communication failures, or data storage issues, can introduce bias and uncertainty into analyses, jeopardizing the reliability of derived insights. Addressing these gaps requires employing appropriate imputation techniques to fill in missing values based on available data. Ignoring such gaps or using inappropriate methods can lead to skewed temperature averages, inaccurate trend estimations, and compromised decision-making in areas dependent on precise temperature information. For example, a missing temperature reading during a heatwave event, if not properly accounted for, could lead to an underestimation of the event’s severity, impacting public health emergency responses.

Several methodologies exist for handling temperature data gaps, each with its own assumptions and limitations. Simple interpolation methods, such as linear interpolation, estimate missing values based on adjacent data points. More sophisticated approaches, such as kriging or machine learning models, can leverage spatial and temporal correlations to provide more accurate imputations. The choice of imputation method should be guided by the characteristics of the data and the specific objectives of the analysis. For instance, in agricultural applications, data gaps during critical growth stages may require more sophisticated imputation methods that consider factors such as solar radiation and soil moisture. Furthermore, transparency in documenting data gap handling methods is essential for ensuring reproducibility and accountability in temperature data applications in the Eugene area.
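Below is a minimal sketch of the simplest method mentioned above: linear interpolation over a regularly spaced series. It adds two safeguards. Gaps longer than a limit, and gaps at either end of the series, are left unfilled, and every imputed value is flagged so that the imputation can be documented. The three-step limit and the readings are hypothetical choices. This is not a substitute for kriging or model-based methods when gaps are long.

```python
def fill_gaps_linear(values, max_gap=3):
    """Linearly interpolate runs of None no longer than max_gap that have known values on both sides.

    Assumes equally spaced readings. Returns (filled_values, imputed_flags); longer gaps
    and gaps at either end of the series stay None."""
    filled = list(values)
    imputed = [False] * len(values)
    n = len(values)
    i = 0
    while i < n:
        if filled[i] is not None:
            i += 1
            continue
        start = i
        while i < n and filled[i] is None:
            i += 1
        end = i  # index of the first known value after the gap, or n
        gap = end - start
        if start == 0 or end == n or gap > max_gap:
            continue  # cannot (or should not) interpolate this run
        left, right = filled[start - 1], filled[end]
        for k in range(start, end):
            frac = (k - start + 1) / (gap + 1)
            filled[k] = left + (right - left) * frac
            imputed[k] = True
    return filled, imputed

# Hypothetical hourly readings in °C with a short gap, a long gap and a trailing gap.
hourly = [12.0, None, None, 15.0, 16.0, None, None, None, None, 20.0, None]
filled, flags = fill_gaps_linear(hourly, max_gap=3)
print(filled)
print(flags)
```

Keeping the `flags` list alongside the data makes it easy to report how many values were imputed and to exclude them from sensitive analyses.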

In summary, proper data gap handling is an indispensable element in the accurate selection and utilization of temperature data for Eugene, Oregon. Addressing data gaps through appropriate imputation techniques minimizes bias and uncertainty, ensuring the reliability of subsequent analyses. While several imputation methodologies exist, the choice should be guided by the specific data characteristics and analytical goals. Moreover, transparent documentation of data gap handling methods promotes reproducibility and enhances the credibility of findings. The effective management of data gaps thus forms a cornerstone of responsible and reliable temperature data applications, contributing to informed decision-making and effective resource management in the region.

7. Metadata Documentation

Metadata documentation is indispensable when selecting temperature data for Eugene, Oregon. It provides crucial contextual information, ensuring appropriate data usage and interpretation. Without comprehensive metadata, the value and reliability of the selected temperature data are substantially diminished, potentially leading to inaccurate analyses and misinformed decisions.

- Data Source Identification

Metadata documentation clearly identifies the source of the temperature data, including the organization responsible for collection and maintenance. This allows users to assess the source’s credibility and the quality control procedures employed. For instance, knowing that temperature readings originate from the National Weather Service, rather than an unverified source, increases confidence in data reliability. This identification directly informs the decision to select specific temperature data for Eugene, Oregon, enabling prioritization of trusted sources.

- Sensor Specifications and Calibration

Metadata should detail the type of sensor used to measure temperature, along with its calibration history. Different sensor types possess varying levels of accuracy and are subject to specific biases. Understanding sensor specifications allows users to account for potential measurement errors and choose data that meets the required precision. Regular calibration ensures that the sensor remains accurate over time. This information is crucial when selecting temperature data for Eugene, Oregon, as it directly impacts the validity of analyses.

- Measurement Procedures and Protocols

Metadata should outline the specific measurement procedures followed, including sensor placement, data collection frequency, and any averaging or processing applied. Variations in measurement protocols can significantly influence temperature readings. For example, the height at which temperature is measured affects the recorded value. World Meteorological Organization guidance places air-temperature sensors roughly 1.25 to 2 m above the ground, and a sensor mounted on a rooftop or just above pavement can read quite differently. Adherence to standardized protocols enhances data comparability across different locations and time periods. These protocol details are essential when selecting and comparing temperature data for Eugene, Oregon, ensuring consistency in methodology.

- Data Quality Flags and Limitations

Metadata documentation provides information about data quality issues, such as missing values, outliers, or suspected errors. Data quality flags indicate which data points should be treated with caution or excluded from analysis. Understanding data limitations is crucial for avoiding misinterpretations and ensuring that analyses are based on reliable information. When selecting temperature data for Eugene, Oregon, reviewing these quality flags allows for informed decisions about data usability.
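The facets above can be captured in a small, structured metadata record that is checked before a series is used. The sketch below is illustrative. The field names are hypothetical rather than a standard schema, and so are the quality-flag codes ("S", "X"). The height range follows the WMO guidance mentioned earlier, and the required unit and maximum interval are assumed analysis requirements.

```python
from dataclasses import dataclass

@dataclass
class SeriesMetadata:
    source: str             # organization that collected and maintains the data
    station_id: str         # hypothetical identifier
    sensor_type: str
    last_calibrated: str    # ISO date of the most recent calibration
    sensor_height_m: float  # sensor height above ground
    interval_minutes: int   # recording interval (temporal resolution)
    unit: str               # "C" or "F"

@dataclass
class Reading:
    timestamp: str
    value: float
    qc_flag: str = ""       # "" = passed QC; other codes follow a hypothetical convention

def metadata_issues(meta, required_unit="C", max_interval_minutes=60, height_range_m=(1.25, 2.0)):
    """Return human-readable reasons this series may not fit the analysis."""
    issues = []
    if meta.unit != required_unit:
        issues.append(f"unit is {meta.unit}, expected {required_unit}")
    if meta.interval_minutes > max_interval_minutes:
        issues.append(f"interval {meta.interval_minutes} min is coarser than {max_interval_minutes} min")
    low, high = height_range_m
    if not low <= meta.sensor_height_m <= high:
        issues.append(f"sensor height {meta.sensor_height_m} m outside {low} to {high} m")
    return issues

def usable_readings(readings, reject_flags=frozenset({"S", "X"})):
    """Drop readings whose quality flag is in reject_flags (flag codes are hypothetical)."""
    return [r for r in readings if r.qc_flag not in reject_flags]

meta = SeriesMetadata("hypothetical agency", "example-001", "resistance thermometer",
                      "2023-03-01", 1.5, 60, "F")
readings = [Reading("2023-07-01T14:00", 88.0),
            Reading("2023-07-01T15:00", 140.0, "X"),
            Reading("2023-07-01T16:00", 90.0, "S")]
print(metadata_issues(meta))
print(len(usable_readings(readings)), "of", len(readings), "readings usable")
```

Storing these records next to the data also covers much of the documentation trail recommended in Tip 7.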

The aforementioned facets highlight the integral role of metadata documentation in ensuring the proper selection and utilization of temperature data for Eugene, Oregon. Neglecting metadata documentation can lead to critical errors and undermine the trustworthiness of any conclusions drawn from the data. Therefore, a thorough assessment of metadata is a prerequisite for responsible data selection, contributing to better-informed decisions in sectors such as agriculture, urban planning, and climate research.

Frequently Asked Questions

This section addresses common inquiries regarding the process of selecting appropriate temperature data for the Eugene, Oregon, area. The following questions and answers provide clarity on critical aspects of data acquisition and utilization.

Question 1: What primary sources offer reliable temperature data for Eugene, Oregon?

The National Weather Service (NWS) and accredited meteorological organizations provide the most reliable temperature records. Data from these sources undergo rigorous quality control procedures.

Question 2: What temporal resolution is recommended for detailed building energy consumption analyses?

Hourly temperature readings are preferable for detailed analyses of building energy consumption. This resolution captures short-term temperature fluctuations affecting energy demand.

Question 3: How should missing temperature data points be addressed?

Data gaps should be addressed using interpolation techniques or by consulting multiple data sources. Any imputation methods used should be meticulously documented.

Question 4: How does geographic proximity impact the selection of a weather station?

Weather stations should be geographically close to the area of interest within Eugene. Localized microclimates can cause temperature variations, even over relatively short distances.

Question 5: Why is unit standardization essential for temperature data analysis?

Temperature data must be standardized to a consistent unit (Celsius or Fahrenheit). Inconsistent units introduce significant errors in subsequent calculations and comparisons.

Question 6: What role does metadata documentation play in data selection?

Metadata documentation provides essential information about data sources, measurement procedures, and data quality, enabling informed decisions about data usability and reliability.

Accurate temperature data selection is crucial for informed decision-making in various applications, including construction, agriculture, and scientific research. Adherence to best practices ensures data integrity and contributes to sound strategic planning.

The conclusion that follows summarizes these considerations for the Eugene, Oregon, area.

Conclusion

The preceding discussion of selecting temperature data for Eugene, Oregon, has underscored the multifaceted nature of acquiring and utilizing accurate temperature data for the region. Attention to data source reliability, temporal resolution, geographic proximity, data consistency, unit standardization, data gap handling, and metadata documentation is paramount. Failure to address these considerations introduces uncertainty and undermines the validity of analyses that rely on temperature information.

Continued adherence to rigorous data selection protocols and diligent documentation practices is crucial for informed decision-making across diverse sectors in Eugene, Oregon. Prioritizing data integrity is essential for effective resource management, infrastructure planning, and climate resilience initiatives, thereby safeguarding the region’s long-term sustainability.